Welcome to the world of Hadoop: A small baby elephant: Big Data Assignment for Praxis Business School, Part 1

Big Data has attracted the attention of many corporations, individuals and leading figures in the field of analytics. Big data is a buzzword, or catch-phrase, used to describe a massive volume of both structured and unstructured data that is so large it is difficult to process using traditional database and software techniques.

A small baby elephant by the name of Hadoop comes to the rescue.

Hadoop is a framework that solves the problem of processing large amounts of data (terabytes) by spreading the work across a cluster of computers.
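To see why combining computers helps, here is a rough back-of-the-envelope sketch. The figures are assumptions chosen only for illustration (1 TB of data, each machine reading at 100 MB/s, perfect parallelism with no coordination or network overhead); a real cluster will not reach this ideal.

```java
// Back-of-the-envelope estimate of how long it takes to read a dataset once.
// ASSUMPTIONS (illustrative only): 1 TB of data, 100 MB/s sequential read per
// machine, perfect parallelism with no coordination or network overhead.
final class ScanTimeEstimate {

    /** Seconds needed to read dataMb megabytes on `machines` machines at mbPerSec each. */
    static double scanSeconds(double dataMb, double mbPerSec, int machines) {
        if (machines < 1) {
            throw new IllegalArgumentException("need at least one machine");
        }
        return dataMb / (mbPerSec * machines);
    }

    public static void main(String[] args) {
        double oneTerabyteMb = 1_000_000; // 1 TB expressed in (decimal) megabytes
        double readSpeed = 100;           // assumed MB/s per machine
        for (int n : new int[] {1, 10, 100}) {
            System.out.printf("%3d machine(s): %.0f s%n", n, scanSeconds(oneTerabyteMb, readSpeed, n));
        }
    }
}
```

Under those assumptions a single machine needs about 10,000 seconds (roughly 2.8 hours) to read the data once, while 100 machines, each reading its own share, need about 100 seconds.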

Before I proceed with the objective of this blog, we must understand two principal concepts of Hadoop:

a) HDFS: The Hadoop Distributed File System distributes large files across multiple machines in a way that is invisible to the user.

b) MapReduce: This is the crux of Hadoop. A job is broken down into two tasks, map and reduce. Take a small example: a room contains colored balls, and we need to count how many balls there are of each color. The map program identifies the color of each ball and sticks a Post-it note for that color on the table, whereas the reduce program adds up the number of Post-it notes for each color.
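The colored-balls analogy can be sketched in plain Java, with no Hadoop involved. This is only an illustration of the idea: the class and method names are hypothetical, and the explicit `shuffle` step stands in for the grouping that Hadoop itself performs between the map and reduce phases. It requires Java 9 or later (`List.of`, `Map.entry`).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java simulation of the colored-balls example (hypothetical names, no Hadoop).
// map: each ball -> (color, 1) "Post-it"; shuffle: group Post-its by color;
// reduce: add up the Post-its for each color.
final class ColoredBallCount {

    // Map phase: emit one (color, 1) pair per ball.
    static List<Map.Entry<String, Integer>> map(List<String> balls) {
        List<Map.Entry<String, Integer>> postIts = new ArrayList<>();
        for (String color : balls) {
            postIts.add(Map.entry(color, 1));
        }
        return postIts;
    }

    // Shuffle phase: group the values by key (Hadoop does this between map and reduce).
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> grouped = new TreeMap<>(); // sorted keys
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    // Reduce phase: sum the values collected for each key.
    static Map<String, Integer> reduce(Map<String, List<Integer>> grouped) {
        Map<String, Integer> totals = new TreeMap<>();
        grouped.forEach((color, ones) ->
                totals.put(color, ones.stream().mapToInt(Integer::intValue).sum()));
        return totals;
    }

    public static void main(String[] args) {
        List<String> balls = List.of("red", "blue", "red", "green", "blue", "red");
        System.out.println(reduce(shuffle(map(balls))));
    }
}
```

Running `main` prints `{blue=2, green=1, red=3}`: the Post-it notes for each color are grouped together and then added up.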

I am an analytics enthusiast who got a chance to work on the word count program on the Hadoop platform. This blog is meant as a simple guide to running a word count program.

Word Count Program using Hadoop

1) Start the Hortonworks Data Platform (HDP) sandbox through Oracle VirtualBox

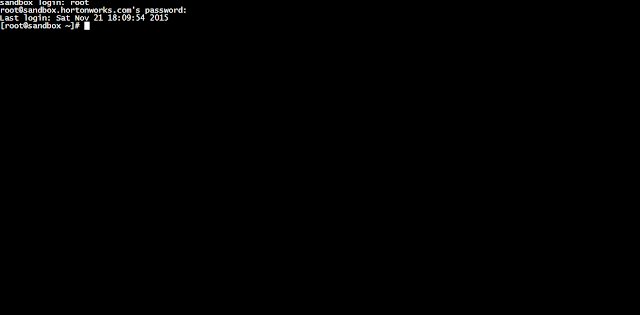

HDP provides an enterprise-ready data platform that enables organizations to adopt a modern data architecture. The sandbox is a single-node Hadoop cluster bundled with Pig, Hive and other applications. VirtualBox is a powerful x86 and AMD64/Intel64 virtualization product for enterprise as well as home use. Once you start the virtual machine, you should see the screen shown above.

2) Open the SSH terminal in VirtualBox

From this screen, go to the address shown to open the shell.

Browse to http://127.0.0.1:4200/ to open the sandbox's web-based shell, which asks for a user name and password. The user name is root and the password is hadoop.

Look at the output below:

3) Create a directory for the Java programs

To do this, we need to know a few Unix commands:

mkdir WC Classes

4) Copy the three Java programs

There are three programs: SumReducer, WordMapper and WordCount. They are listed below.

SumReducer program (SumReducer.java):

```java
import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

// Reducer: receives each word together with all the 1s the mapper emitted
// for it, and writes the word with their sum.
public class SumReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

    // Reused output object, so no new IntWritable is allocated per key
    private IntWritable totalWordCount = new IntWritable();

    @Override
    public void reduce(Text key, Iterable<IntWritable> values, Context context_W)
            throws IOException, InterruptedException {
        int wordCount = 0;
        // Add up the partial counts for this word
        for (IntWritable value : values) {
            wordCount += value.get();
        }
        totalWordCount.set(wordCount);
        context_W.write(key, totalWordCount);
    }
}
```

WordCount driver program (WordCount.java):

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

// Driver: configures the word count job and runs it on the cluster.
public class WordCount {

    public static void main(String[] args) throws Exception {
        if (args.length != 2) {
            System.err.println("usage: [input] [output]");
            System.exit(-1);
        }

        Job job = Job.getInstance(new Configuration(), "word count");
        job.setJarByClass(WordCount.class);

        // Types of the output pairs: word -> count
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        job.setMapperClass(WordMapper.class);
        job.setReducerClass(SumReducer.class);

        // Read plain text line by line; write "word<TAB>count" lines
        job.setInputFormatClass(TextInputFormat.class);
        job.setOutputFormatClass(TextOutputFormat.class);

        FileInputFormat.setInputPaths(job, new Path(args[0]));
        // The output directory must not exist yet, otherwise the job fails
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // Submit the job, print its progress and wait for it to finish;
        // the exit code reports success (0) or failure (1)
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```

WordMapper program (WordMapper.java):

```java
import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

// Mapper: for every word in an input line, emits the pair (word, 1).
public class WordMapper extends Mapper<Object, Text, Text, IntWritable> {

    private Text word = new Text();
    private final static IntWritable one = new IntWritable(1);

    @Override
    public void map(Object key, Text value, Context context_W) throws IOException, InterruptedException {
        // Convert Text to String
        String line = value.toString();

        // Clean up the string: remove every character that is not a letter,
        // digit or whitespace, then convert to lower case
        // (letters-only alternative: line.replaceAll("[^a-zA-Z\\s]", "").toLowerCase();)
        line = line.replaceAll("[^a-zA-Z0-9\\s]", "").toLowerCase();

        // Break the line into words and emit (word, 1) for each one
        StringTokenizer wordList = new StringTokenizer(line);
        while (wordList.hasMoreTokens()) {
            word.set(wordList.nextToken());
            context_W.write(word, one);
        }
    }
}
```
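Before running on the cluster, it can help to check locally which counts to expect. The following is a hypothetical plain-Java sketch, not part of the assignment and not using any Hadoop classes: it applies the same clean-up regex and `StringTokenizer` splitting as `WordMapper`, and sums per word as `SumReducer` does. The `TreeMap` mimics the sorted order of keys in a single reducer's output for plain ASCII words. It requires Java 9 or later (`List.of`).

```java
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Local sanity check of the word count logic (hypothetical helper, no Hadoop).
final class LocalWordCount {

    static Map<String, Integer> count(List<String> lines) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : lines) {
            // Same clean-up rule as WordMapper: keep letters, digits and whitespace
            String cleaned = line.replaceAll("[^a-zA-Z0-9\\s]", "").toLowerCase();
            StringTokenizer words = new StringTokenizer(cleaned);
            while (words.hasMoreTokens()) {
                // The mapper would emit (word, 1); the reducer would sum them
                counts.merge(words.nextToken(), 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> sample = List.of("Hello Hadoop!", "hello, big data: Hadoop 2");
        // Tab-separated, like TextOutputFormat's default output
        count(sample).forEach((word, n) -> System.out.println(word + "\t" + n));
    }
}
```

For the two sample lines it prints `2`, `big`, `data`, `hadoop` and `hello` with counts 1, 1, 1, 2 and 2.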

To create each program, open a new file in the shell with vi (for example, vi SumReducer.java), right-click in the browser terminal, select "Paste from browser" to paste the code, and save the file.

5) Copy the following shell scripts for compiling and running the Java program

The code is given below:

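6) Reflection of Hadoop libraries in the HDP distribution

The commands are as follows:

hdfs dfs -rm -r /user/hue/wc-out2

hdfs dfs -ls /user/hue

hdfs dfs -ls /user/hue/wc

The first command deletes the output directory left by a previous run, because a MapReduce job fails if its output directory already exists. The other two list the contents of /user/hue and /user/hue/wc.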

7) Use Hue to upload the data files into an appropriate directory, and modify the shell scripts to point to the correct input and output directories.

The screenshots show the Job Browser reporting that the program succeeded and that the output file wcout was created. As the last step, I am attaching screenshots of the output.

8) Output
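With the default TextOutputFormat, each line of the job's output holds a word, a tab and its count; for a job with a single reducer, the file inside the output directory is normally named part-r-00000. The sketch below assumes that layout and that the file has first been copied to the local disk (the path passed as `args[0]` is hypothetical); it lists the ten most frequent words. It requires Java 9 or later (`Map.entry`).

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;

// Reads word count output lines of the form "word<TAB>count" and returns the
// n most frequent words. Assumes TextOutputFormat's default tab separator.
final class TopWords {

    static List<Map.Entry<String, Integer>> top(List<String> lines, int n) {
        List<Map.Entry<String, Integer>> entries = new ArrayList<>();
        for (String line : lines) {
            int tab = line.lastIndexOf('\t');
            if (tab < 0) {
                continue; // skip blank or malformed lines
            }
            String word = line.substring(0, tab);
            int count = Integer.parseInt(line.substring(tab + 1).trim());
            entries.add(Map.entry(word, count));
        }
        // Highest count first; ties broken alphabetically for a stable result
        entries.sort(Map.Entry.<String, Integer>comparingByValue(Comparator.reverseOrder())
                .thenComparing(Map.Entry.<String, Integer>comparingByKey()));
        return entries.subList(0, Math.min(n, entries.size()));
    }

    public static void main(String[] args) throws IOException {
        // args[0]: hypothetical local copy of the job output, e.g. part-r-00000
        List<String> lines = Files.readAllLines(Paths.get(args[0]));
        top(lines, 10).forEach(e -> System.out.println(e.getKey() + "\t" + e.getValue()));
    }
}
```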

References:

Hadoop Images Google

